Poisoning the Well: How Propaganda Corrupts AI

This article explores how propaganda and misinformation are quietly corrupting artificial intelligence through a process known as data poisoning. Using the recent “High Five” operation in the Philippines as a case study, it explains how AI tools like ChatGPT can be misused to spread political narratives—and how that misinformation can eventually contaminate the very AI models we rely on. A must-read for students, policymakers, and social media users who care about truth, tech, and the future of democracy.

ARTIFICIAL INTELLIGENCE (AI), CYBERSECURITY

Dr. Derek Presto

6/20/2025 · 10 min read

Imagine a village well that everyone drinks from. Now picture a sly intruder dropping poison into that well. Little by little, the water becomes tainted, affecting everyone who drinks from it. In the digital world, the “well” is our shared pool of information, and propaganda is the poison. As artificial intelligence (AI) systems like chatbots and search engines drink from this well, learning from the texts, posts, and articles we put online, there’s a growing danger that malicious actors are poisoning the data to corrupt AI’s output. In this article, we’ll explore how propaganda and misinformation can warp AI systems and why that matters to everyday Filipinos, students, policymakers, and social media users.

AI Learns From What We Post (The Good and the Bad)

Large language models (LLMs) – a fancy term for AI systems like ChatGPT that generate human-like text – learn by reading vast amounts of content from the internet. That content is gathered through a process known as scraping: automated programs collect text from millions of websites, social media posts, news articles, and more, and the model is then trained to find patterns in that language. If you’ve ever wondered how ChatGPT seems to know a bit about everything, it’s because it was trained on a huge swath of the internet’s content. The trouble is that the internet includes the good, the bad, and the outright false. It’s the classic case of garbage in, garbage out: if you train an AI on biased or false information, it may spout biased or false answers.

Think of an AI model’s training data as the water in that village well. Most of it is clean, but it doesn’t take much poison to contaminate the supply. If even a small percentage of the text an AI learns from is propaganda or misinformation, it can influence the system’s outputs. This effect is sometimes described as algorithmic bias – the AI reflecting the biases or skewed truths in its training data. For example, if an AI trains on lots of content claiming a conspiracy theory is true, it might start presenting that theory as a factual answer. The AI doesn’t know truth from lie; it only knows what it was fed.
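One way to see why a little poison can go a long way is simple arithmetic. The sketch below uses made-up numbers (the corpus size, the number of genuine documents on a niche topic, and the number of planted documents are all assumptions for illustration). It shows that planted content can be a tiny share of the whole corpus while still making up most of what is written about one narrow topic. The share of documents is not the same thing as influence on a model, so treat this as intuition, not a measurement.

```python
def poisoned_share(genuine_topic_docs, planted_docs):
    """Fraction of a topic's documents that were planted."""
    return planted_docs / (genuine_topic_docs + planted_docs)


# All three numbers are hypothetical, chosen only for illustration.
corpus_size = 1_000_000     # genuine documents in the whole training corpus
genuine_topic_docs = 500    # genuine documents about one niche topic
planted_docs = 1_000        # propaganda documents planted on that topic

share_of_corpus = planted_docs / (corpus_size + planted_docs)
share_of_topic = poisoned_share(genuine_topic_docs, planted_docs)

print(f"{share_of_corpus:.2%} of corpus, {share_of_topic:.0%} of topic")
```

With these assumed numbers, the planted documents are about a tenth of one percent of the corpus but about two thirds of everything the corpus says on that topic.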

Propaganda as Poison: Two Ways It Seeps In

Propagandists – whether political spinners, troll farms, or even nation-state actors – have discovered they can exploit AI in a couple of ways:

Using AI as a Megaphone (External Misuse): This is the more direct method. Actors use AI tools like ChatGPT to mass-produce propaganda content – from fake news articles to armies of social media comments – at a speed and scale humans could never match. There are already documented cases of people using AI to churn out thousands of posts and articles pushing false narratives. For instance, by instructing an AI to “write 100 different comments praising my candidate and discrediting the opponent,” propagandists can flood Facebook threads or Twitter with seemingly diverse voices all echoing the same message. AI becomes a force multiplier for their influence campaigns.

Poisoning AI’s Own Well (Internal Corruption): This method is more insidious. Instead of using AI to generate propaganda, some actors try to corrupt the AI models themselves. How? By flooding the internet with misleading content, in the hope that AI developers will scrape it up as training data. Recently, researchers noted an apparent attempt by a pro-Russia network (dubbed the “Pravda network”) to do precisely this. The network spread large volumes of pro-Russian propaganda across websites and social media, aiming to “infect” future AI models so that these models would regurgitate Kremlin-friendly narratives as if they were true. Alarmingly, some major chatbots have already been caught citing these propaganda sites in their answers. In other words, the poison is already dripping in.

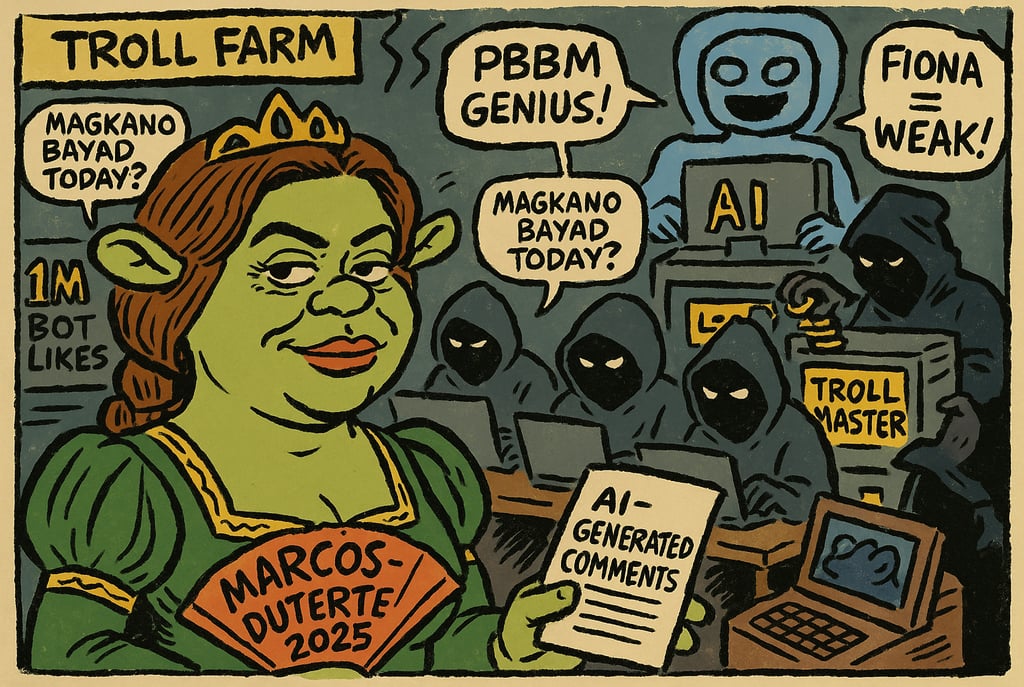

A Case Close to Home: The “High Five” Operation in the Philippines

Digital misinformation isn’t a distant problem – we’ve felt its effects here in the Philippines for years. From election-season fake news to coordinated “troll farms” pushing hashtags, we know how online propaganda can shape public opinion. Now AI has entered the mix. In June 2025, a Philippine marketing firm was called out for using AI in a covert influence campaign.

OpenAI (the company behind ChatGPT) reported that it shut down a network of ChatGPT accounts originating from the Philippines that were being misused to generate political propaganda. These accounts, linked to a local PR agency called Comm&Sense Inc., had been using ChatGPT to pump out short, catchy comments in English and Filipino praising President Ferdinand “Bongbong” Marcos Jr. and his policies. Many of these AI-generated comments were only a few words long – brief but highly partisan. They’d applaud the President (“Great move by PBBM!”) or smear his critics (even calling the Vice President “Princess Fiona,” a mocking meme reference). Then, the team behind this operation would post these comments across TikTok and Facebook using fake accounts, creating the illusion of grassroots support.

OpenAI’s investigators nicknamed this the “High Five” operation. Why High Five? Because the propagandists ran five dummy TikTok channels filled with cloned content, often using the same videos with different captions, and the comments they generated were riddled with emojis (those little hand-clap and thumbs-up icons – a digital high five). It was a sly campaign to make one side of a political narrative seem overwhelmingly popular online. But it wasn’t dozens of real Filipinos spontaneously cheering on the President – it was a handful of operators and an AI writing their cheers for them.

OpenAI banned the offending accounts and exposed the scheme in a public report on June 5, 2025. It’s important to note that this was not a case of hackers breaking into ChatGPT or “poisoning” the AI’s core system. The AI (ChatGPT) itself wasn’t changed or reprogrammed. Instead, it was misused as a tool to generate content, like using a printer to publish fake pamphlets. The human operators fed ChatGPT prompts, and it dutifully generated propaganda text for them. They essentially had an AI ghostwriter for fake pro-government commentary.

While this network was uncovered and stopped, it illustrates a clear and present danger: AI can turbocharge local propaganda efforts. What used to require dozens of paid trolls working long hours can now be done by a few people with a clever script and an AI assistant. For Filipino social media users, this means we must be more vigilant than ever – that impassioned comment you see on a news post might not be from a real kabayan, even if it has a Filipino name and profile picture. It could be generated by AI and deployed in an influence operation.

Why This Matters: When AI Eats Its Own Poison

You might think, “Alright, some bad actors use AI to generate fake posts. Annoying, but if we catch and delete them, problem solved, right?” The bigger problem lies in the second method of poisoning the well – the long game of saturating the internet with AI-generated propaganda that future AIs will learn from. It’s like climate change for information: the effects accumulate over time and are harder to reverse.

Consider this: now that High Five’s content is out there (some posts may still linger online), it becomes part of the data pool that the next generation of AI might scoop up. Today’s ChatGPT is tightly controlled by OpenAI’s filters, but many AI models are open-source or less strictly curated. Developers often train these models on large, publicly available dumps of internet text. If that training data includes all those fake pro-Marcos comments and AI-generated posts, the new model won’t know they were part of a propaganda campaign; it will treat them as just another example of human opinion. Over time, the model might start echoing those viewpoints or citing those fabricated “facts,” effectively poisoned by the last generation of AI’s output.

This isn’t a hypothetical scare story – researchers are already warning about this feedback loop. As AI-generated content fills more of the internet, it risks corrupting the training data of future models. One Scientific American article dubbed it an “AI ouroboros” – the snake eating its own tail. When AI eats AI’s leftovers, errors and biases compound. Imagine photocopying a document, then photocopying the copy, again and again; eventually, the text blurs. Similarly, if AI models keep learning from the outputs of previous models, the inaccuracies snowball. Studies suggest that training on AI-written text can degrade a model’s performance, and that the damage builds with each generation, a phenomenon called model collapse. In plain terms, the AI starts producing gibberish or biased statements because it has been trained on polluted data.
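The photocopy analogy can be turned into a toy simulation. This is a minimal sketch under strong assumptions, not the method used in the studies mentioned above: the “model” here is just a table of word frequencies, “training” means counting words, and “generating” means drawing words at random in proportion to those counts. The function name `simulate_generations` and its parameters are made up for this illustration. Each generation learns only from the previous generation’s output, so a word that fails to be sampled once can never come back.

```python
import random
from collections import Counter


def simulate_generations(corpus, generations, sample_size, seed=0):
    """Return the vocabulary size after each round of train-then-generate.

    Toy stand-in for a model: word frequencies only, no real language model.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    current = list(corpus)
    vocab_sizes = [len(set(current))]

    for _ in range(generations):
        # "Train": count how often each word appears in the current corpus.
        counts = Counter(current)
        words = list(counts)
        weights = [counts[word] for word in words]

        # "Generate": the next corpus is sampled from the learned frequencies,
        # so it can only contain words the previous corpus already had.
        current = rng.choices(words, weights=weights, k=sample_size)
        vocab_sizes.append(len(set(current)))

    return vocab_sizes


# Hypothetical starting corpus: a few common words and a few rare ones.
corpus = ["the"] * 50 + ["well"] * 30 + ["is"] * 15 + ["clean"] * 4 + ["rare"]
print(simulate_generations(corpus, generations=20, sample_size=100))
```

The printed list can only stay flat or shrink from one generation to the next, because resampling never invents a word that was not in the previous corpus. Rare words are the most likely to drop out, which is a rough picture of how the less common parts of the original data can fade when models feed on their own output.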

For an everyday user, this could mean a future where you ask a question and the AI’s fluent answer is subtly warped by propaganda injected into its training corpus. For example, a student researching martial-law-era history might get an answer that sounds authoritative but is slanted by revisionist narratives (because numerous blog posts whitewashing the dictatorship were somewhere in the AI’s training data). Or a curious citizen asking “What do Filipinos think about issue X?” might receive a reply skewed toward the viewpoint the propagandists wanted to amplify.

Keeping the Well Clean: What We Can Do

The situation sounds dire, but awareness is the first step toward a solution. Just as communities can come together to protect a water source, we can take action to protect our information ecosystem from being poisoned by disinformation. Here are a few ways forward:

AI Developers and Companies: Just as OpenAI did by detecting and banning the High Five network, AI companies need to actively monitor and guard against malicious use of their tools. More importantly, they must filter and curate the training data for their models, weeding out known propaganda sources. If an AI’s “diet” is cleansed of blatant falsehoods, it’s less likely to spit out toxic answers. This might mean refusing to ingest content from sites known for spreading disinformation or giving extra weight to trustworthy sources. It’s a cat-and-mouse game (propagandists will try to sneak in poison, AI researchers have to catch it), but it’s critical. As one expert put it, AI makers should “ensure their models avoid known foreign disinformation” – don’t drink from a tainted well.

Policymakers and Educators: Digital literacy is as vital as ever. We need programs to teach students and the public how to spot AI-generated content and misinformation. If people know the well might be poisoned, they’ll think twice before trusting every drop. Lawmakers can support initiatives in schools and communities to improve media literacy, making citizens less likely to be fooled by synthetic propaganda. Policies or guidelines for transparency in AI could also help, for example by requiring AI-generated content to be labeled or by mandating that companies document the sources used to train AI models (a kind of “nutrition label” for AI). While regulation must be careful not to stifle innovation, ensuring AI doesn’t become a superspreader of lies is a matter of public interest.

Social Media Platforms: Much of the propaganda circulates on Facebook, Twitter (X), TikTok, YouTube, and similar platforms. These companies should invest in detecting coordinated fake accounts and AI-generated posts. Some red flags (like dozens of accounts posting identical text, or new accounts pumping out high volumes of political content) can be caught by algorithms or attentive moderators. When a campaign is identified, like the one run by Comm&Sense Inc., platforms should remove or flag the content and ideally alert users who might have been exposed. Closer collaboration between AI firms and social media could also help; for instance, OpenAI knew which accounts were abusing ChatGPT, and that intel could help Facebook or TikTok purge the matching accounts from their services.

All of Us (Everyday Internet Users): We all help keep the well clean. Be skeptical of what you read online, especially if it triggers a strong emotional reaction. That viral post praising a politician to the high heavens or demonizing an opponent – pause and double-check it. Does the account look real? Is the language oddly generic or repetitive? It might be part of a manufactured campaign. Please think before you share, because sharing is drawing water from the well and passing it to others. If you suspect something is AI-generated propaganda, call it out or report it. When using AI tools yourself (say, asking ChatGPT for info), remember that it might not have the whole picture. Use AI as a starting point, not the sole source of truth. For important topics, verify its answers against reliable, human-vetted sources.
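Two of the safeguards described above lend themselves to a short sketch: refusing to ingest training content from known disinformation sites, and flagging identical text posted by many accounts. The code below is a minimal illustration under stated assumptions, not how any AI company or platform actually does it. The domain `propaganda.example`, the record layouts (`url`, `text`, `account`), and all function names are hypothetical.

```python
import re
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical blocklist; a real one would come from vetted research.
BLOCKED_DOMAINS = {"propaganda.example"}


def is_blocked(url, blocked_domains=BLOCKED_DOMAINS):
    """True if the URL's host is a blocked domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in blocked_domains)


def filter_training_docs(docs, blocked_domains=BLOCKED_DOMAINS):
    """Keep only documents whose source URL is not on the blocklist."""
    return [doc for doc in docs if not is_blocked(doc["url"], blocked_domains)]


def normalize(text):
    """Lowercase and collapse whitespace so trivial variations still match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def flag_coordinated_text(posts, min_accounts=3):
    """Map each text posted by at least min_accounts accounts to those accounts."""
    accounts_by_text = defaultdict(set)
    for post in posts:
        accounts_by_text[normalize(post["text"])].add(post["account"])
    return {
        text: sorted(accounts)
        for text, accounts in accounts_by_text.items()
        if len(accounts) >= min_accounts
    }
```

A blocklist only catches sources that are already known, and exact-match detection misses comments that were reworded (which AI makes easy), so both checks are starting points in the cat-and-mouse game rather than complete defenses.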

A Shared Responsibility

In Filipino culture, we value clarity of thought. But in today’s digital world, even clean water can be clouded by just a drop of dirt. The same goes for information. A single stream of propaganda or misinformation can pollute how we think, decide, and act. As AI becomes more embedded in how we learn and create, we must all take responsibility for the quality of the information these systems learn from.

The fight against propaganda is not new to the Philippines. We’ve witnessed how misinformation can sow division, undermine public trust, and even endanger lives. With AI in the picture, the challenge has scaled up, but so have our tools to combat it. Just as AI can be used to spread disinformation, AI can also help detect deepfakes, flag troll comments, and shine a light on influence operations. It’s a race between those who seek to pollute the well and those who strive to purify it.

In the end, preserving the integrity of our shared digital well is a collective effort. Whether you’re a student researching online, a policymaker crafting tech regulation, or just a social media user scrolling through the news feed, stay informed and vigilant. Think of yourself as a guardian of that well. If you notice a strange taste in the water – an article that seems fishy, a post that feels too perfect – don’t just consume it unquestioningly. Question it, research it, discuss it. By doing so, you help ensure that the next person who comes to drink from the well gets clarity and truth, not poison.

Ultimately, AI promises to help us solve complex problems and access knowledge at scale. That promise can only be fulfilled if we safeguard the knowledge well from contamination. Let’s work together to keep our water clean – for us and the intelligent machines we’re teaching to drink from it. Our democracy, discourse, and the next generation’s understanding of reality depend on it.

References:

Biddle, L. (2024, August 20). Feedback loops and model contamination: The AI ouroboros crisis. Sease Blog. Retrieved from https://sease.io/…

Brandom, R. (2024, May 28). AI-generated data can poison future AI models. Scientific American. Retrieved from https://www.scientificamerican.com/article/ai-generated-data-can-poison-future-ai-models/

GameOPS. (2025, June 10). OpenAI shuts down the pro-Marcos campaign in the Philippines. Retrieved from https://www.gameops.net/…

Middleton, B. (2024, November 19). Ouroboros of AI: The peril of generative models feeding on their creations. Medium. Retrieved from https://medium.com/@gillesdeperetti/ouroboros-of-ai-the-peril-of-generative-models-feeding-on-their-creations‐bda62e47ac5f0

Philippine Daily Inquirer. (2025, June 10). OpenAI bans PH users behind pro-gov’t drive. Inquirer Technology. Retrieved from https://technology.inquirer.net/142186/openai-bans-ph-users-behind-pro-govt-drive

SCMP. (2025, June 10). Pro-Marcos Jnr Philippines-originated ChatGPT accounts banned over AI misuse. South China Morning Post. Retrieved from https://www.scmp.com/week-asia/politics/article/3313882/pro-marcos-jnr-philippines-originated-chatgpt-accounts-banned-over-ai-misuse

VentureBeat. (2023, May). The AI feedback loop: Researchers warn of “model collapse”. Retrieved from https://venturebeat.com/ai/the-ai-feedback-loop-researchers-warn-of-model-collapse-as‑ai-trains-on‑ai-generated-content/
